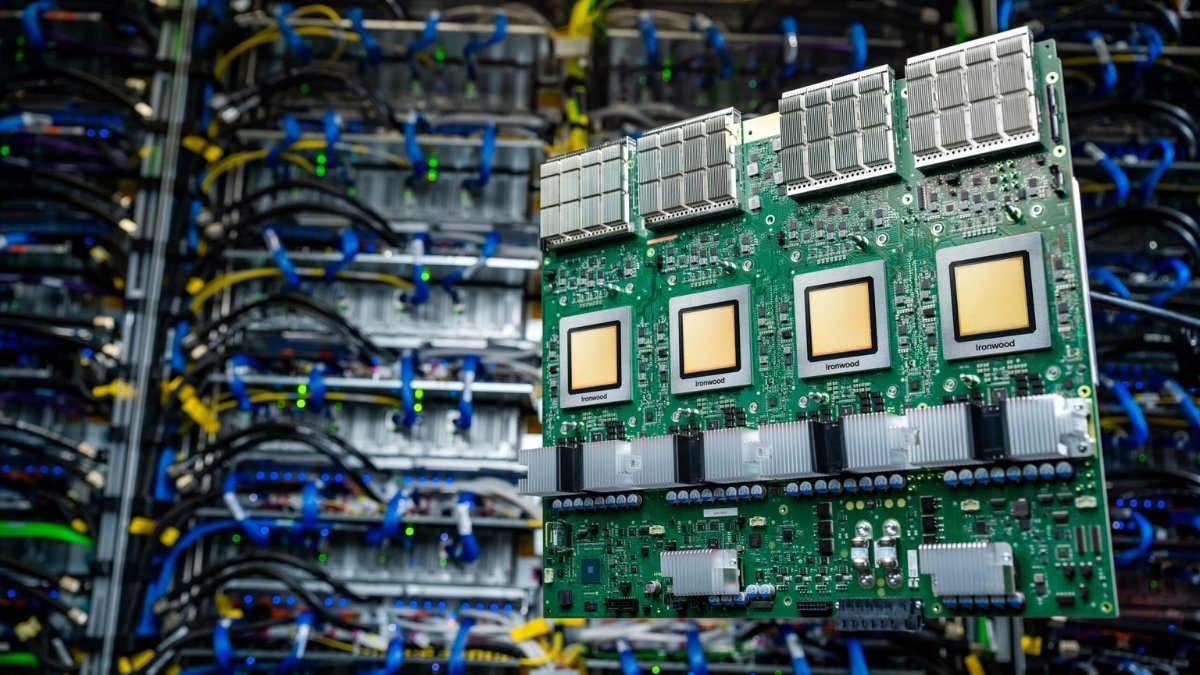

Google’s new Ironwood TPU is a seventh-generation AI chip, built fully in-house, that Google says is more than four times faster than its predecessor. The Tensor Processing Unit is designed for large-scale model training with improved energy efficiency. With Ironwood, Google is directly challenging Nvidia’s dominance in the rapidly growing AI hardware market.

(Credits: The Next Platform)

What Is the Ironwood TPU, and How Is It Four Times Faster?

Ironwood is Google’s seventh-generation Tensor Processing Unit (TPU), a custom AI chip designed in-house to handle the most demanding artificial intelligence workloads.

According to Google, Ironwood delivers more than four times the performance of the previous generation. Up to 9,216 chips can be connected in a single pod, allowing customers to train and scale the world’s largest and most data-intensive AI models.

- The architecture is engineered to eliminate data bottlenecks.

- It boosts efficiency for AI systems that demand enormous computing power.

- Google says the design helps businesses run high-capacity workloads faster and with better energy efficiency than traditional GPU-based infrastructure.

AI startup Anthropic is among the first major customers to adopt the technology. The company plans to use up to one million Google TPUs to power its Claude models. The deal highlights growing demand for specialised hardware optimised for large-scale AI applications.

Google’s Ironwood TPU Emerges as a Strong Rival to Nvidia in the AI Chip Race

The launch of Ironwood marks Google’s most direct challenge yet to Nvidia’s dominance in the AI chip market. While most large language models and AI platforms still depend on Nvidia GPUs, Google is pitching its custom-built silicon as a faster, more scalable and more cost-efficient alternative.

The milestone is the result of nearly a decade of work on Google’s TPU program, and it is part of the company’s broader strategy to control every layer of the AI stack: hardware, software and its expanding cloud infrastructure.

Cloud Growth and Future Investments

Alongside the Ironwood rollout, Google is introducing a series of upgrades aimed at making Google Cloud faster, cheaper and more flexible for enterprise clients. Its latest results show the demand behind these investments:

- The company reported $15.15 billion in cloud revenue for Q3 2025, up 34 percent year-on-year.

- By comparison, Microsoft Azure grew 40 percent in the same period, while Amazon Web Services rose 20 percent.

- To meet surging AI demand, Google has raised its 2025 capital expenditure forecast to as much as $93 billion, up from $85 billion.

“We are seeing substantial demand for our AI infrastructure products, including TPU-based and GPU-based solutions,” CEO Sundar Pichai said. “It’s one of the key drivers of our growth, and we continue to invest heavily to meet that demand.”

As the AI arms race intensifies, Ironwood could redefine how businesses build and deploy AI systems, giving Google a crucial edge in the next era of computing.

